electric-sql.com/docs/api

Apr 8, 2025, 9:38:21 AM - complete - 42.2 kB

Created by:

****ad@vlad.studio

Starting URLs:

https://electric-sql.com/docs/api/http

Crawl Prefixes:

https://electric-sql.com/docs/api

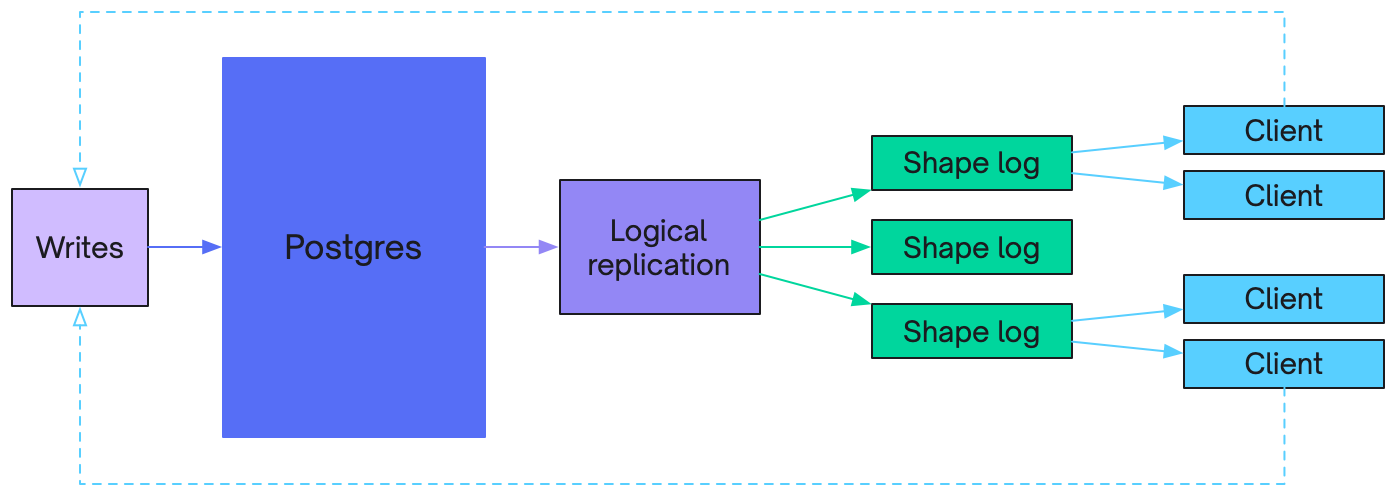

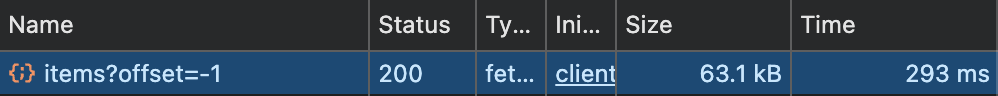

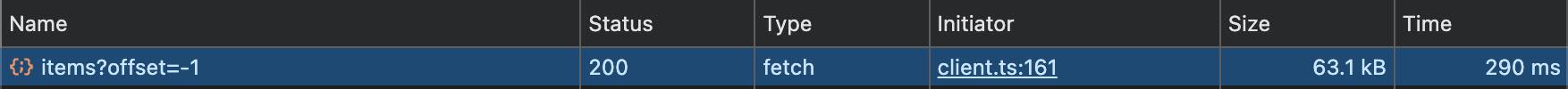

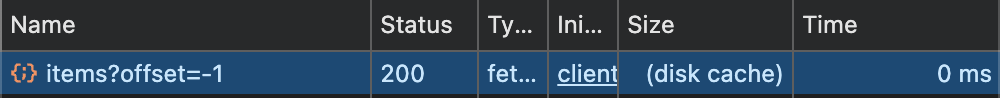

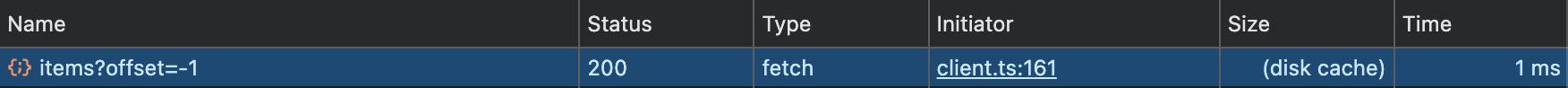

## Page: https://electric-sql.com/docs/api/http ## HTTP API The HTTP API is the primary, low-level API for syncing data with Electric. ## HTTP API specification API documentation is published as an OpenAPI specification: * download the specification file to view or use with other OpenAPI tooling * view the HTML documentation generated using Redocly The rest of this page will describe the features of the API. 💡 If you haven't already, you may like to walk through the Quickstart to get a feel for using the HTTP API. ## Syncing shapes The API allows you to sync Shapes of data out of Postgres using the `GET /v1/shape` endpoint. The pattern is as follows. First you make an initial sync request to get the current data for the Shape, such as: sh curl -i 'http://localhost:3000/v1/shape?table=foo&offset=-1' Then you switch into a live mode to use long-polling to receive real-time updates. We'll go over these steps in more detail below. First a note on the data that the endpoint returns. ### Shape Log When you sync a shape from Electric, you get the data in the form of a log of logical database operations. This is the **Shape Log**. The `offset` that you see in the messages and provide as the `?offset=...` query parameter in your request identifies a position in the log. The messages you see in the response are shape log entries (the ones with `value`s and `operation` headers) and control messages (the ones with `control` headers). The Shape Log is conceptually similar to the logical replication stream from Postgres, except that instead of getting all the database operations, you're getting the ones that affect the data in your Shape. It's then the responsibility of the client to consume the log and materialize out the current value of the shape. Shape log flow diagramme. The values included in the shape log are strings formatted according to Postgres' display settings. The OpenAPI specification defines the display settings the HTTP API adheres to. 
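As a rough illustration of what "materializing" the log means, the sketch below folds a list of log entries into a map keyed by row key. The type names and the `applyLog` helper are hypothetical, and the entry layout (`key`, `value`, `headers.operation`, `headers.control`) is an assumption based on the message shapes described in this documentation rather than a definitive client implementation.

```typescript
// Hypothetical types, modelled on the shape log entries and control messages described above.
type Row = Record<string, string | null>
type ChangeEntry = {
  key: string
  value: Row
  headers: { operation: 'insert' | 'update' | 'delete' }
}
type ControlEntry = { headers: { control: 'up-to-date' | 'must-refetch' } }
type LogEntry = ChangeEntry | ControlEntry

function isChange(entry: LogEntry): entry is ChangeEntry {
  return 'operation' in entry.headers
}

// Fold log entries into the current value of the shape (hypothetical helper).
function applyLog(state: Map<string, Row>, entries: LogEntry[]): Map<string, Row> {
  for (const entry of entries) {
    if (!isChange(entry)) {
      // must-refetch: discard local data; the caller is expected to re-sync from scratch.
      if (entry.headers.control === 'must-refetch') state.clear()
      continue
    }
    switch (entry.headers.operation) {
      case 'insert':
        state.set(entry.key, entry.value)
        break
      case 'update':
        // Updates may carry only the changed columns, so merge into the existing row.
        state.set(entry.key, { ...state.get(entry.key), ...entry.value })
        break
      case 'delete':
        state.delete(entry.key)
        break
    }
  }
  return state
}
```

Calling `applyLog(new Map(), entries)` with the entries from each response, in order, keeps the map in step with the log.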
### Initial sync request When you make an initial sync request, with `offset=-1`, you're telling the server that you want the whole log, from the start, for a given shape. When a shape is first requested, Electric queries Postgres for the data and populates the log by turning the query results into insert operations. This allows you to sync shapes without having to pre-define them. Electric then streams out the log data in the response. Sometimes a log can fit in a single response. Sometimes it's too big and requires multiple requests. In this case, the first request will return a batch of data and an `electric-offset` header. An HTTP client should then continue to make requests setting the `offset` parameter to this header value. This allows the client to paginate through the shape log until it has received all of the current data. ### Control messages The client will then receive an `up-to-date` control message at the end of the response data: json {"headers": {"control": "up-to-date"}} This indicates that the client has all the data that the server was aware of when fulfilling the request. The client can then switch into live mode to receive real-time updates. Must-refetch Note that the other control message is `must-refetch` which indicates that the client must throw away their local shape data and re-sync from scratch: json {"headers": {"control": "must-refetch"}} ### Live mode Once a client is up-to-date, it can switch to live mode to receive real-time updates, by making requests with `live=true`, an `offset` and a shape `handle`, e.g.: sh curl -i 'http://localhost:3000/v1/shape?table=foo&live=true&handle=3833821-1721812114261&offset=0_0' The `live` parameter puts the server into live mode, where it will hold open the connection, waiting for new data to arrive. This allows you to implement a long-polling strategy to consume real-time updates. 
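The request pattern so far (initial sync from `offset=-1`, pagination via the `electric-offset` header, then `live=true` with a `handle`) can be sketched as a small state machine. This is a minimal sketch under assumptions, not a reference client: the helper names are hypothetical, the response body is assumed to be a JSON array of messages, and the `electric-handle` response header name is an assumption (only `electric-offset` is named above).

```typescript
// Minimal fetch-like signature so the sketch does not depend on a particular runtime.
type ShapeResponse = {
  ok: boolean
  status: number
  headers: { get(name: string): string | null }
  json(): Promise<unknown>
}
type FetchLike = (url: string) => Promise<ShapeResponse>
type Message = { headers: Record<string, unknown> }
type SyncState = { offset: string; handle?: string; live: boolean }

const INITIAL_STATE: SyncState = { offset: '-1', live: false }

function buildShapeUrl(baseUrl: string, table: string, state: SyncState): string {
  const query = [
    `table=${encodeURIComponent(table)}`,
    `offset=${encodeURIComponent(state.offset)}`,
  ]
  if (state.handle) query.push(`handle=${encodeURIComponent(state.handle)}`)
  if (state.live) query.push('live=true')
  return `${baseUrl}?${query.join('&')}`
}

function nextState(state: SyncState, res: ShapeResponse, messages: Message[]): SyncState {
  // must-refetch: throw away progress and start again from the beginning of the log.
  if (messages.some((m) => m.headers.control === 'must-refetch')) return INITIAL_STATE
  return {
    offset: res.headers.get('electric-offset') ?? state.offset,
    // Assumption: the shape handle is returned in an `electric-handle` header.
    handle: res.headers.get('electric-handle') ?? state.handle,
    // Once up-to-date, stay in live mode for subsequent requests.
    live: state.live || messages.some((m) => m.headers.control === 'up-to-date'),
  }
}

// Long-polling loop. Error handling is deliberately simplified: non-OK responses are thrown.
async function syncShape(
  baseUrl: string,
  table: string,
  fetchImpl: FetchLike,
  onMessages: (messages: Message[]) => void,
  shouldStop: () => boolean = () => false
): Promise<void> {
  let state = INITIAL_STATE
  while (!shouldStop()) {
    const res = await fetchImpl(buildShapeUrl(baseUrl, table, state))
    if (!res.ok) throw new Error(`Shape request failed with status ${res.status}`)
    const messages = (await res.json()) as Message[]
    onMessages(messages)
    state = nextState(state, res, messages)
  }
}
```

In a runtime with a global `fetch`, something like `syncShape('http://localhost:3000/v1/shape', 'foo', (url) => fetch(url), console.log)` would drive the loop.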
The server holds open the request until either it times out (returning `200` with only an up-to-date message) or new data is available, which it sends back as the response. The client then reconnects and the server blocks again for new content. This way the client is always updated as soon as new data is available. ### Clients The algorithm for consuming the HTTP API described above can be implemented from scratch for your application. However, it's typically implemented by clients that can be re-used and provide a simpler interface for application code. There are a number of existing clients, such as the TypeScript and Elixir clients. If one doesn't exist for your language or environment, we hope that the pattern is simple enough that you should be able to write your own client relatively easily. ## Caching HTTP API responses contain cache headers, including `cache-control` with `max-age` and `stale-age` and `etag`. These work out-of-the-box with caching proxies, such as Nginx, Caddy or Varnish, or a CDN like Cloudflare or Fastly. There are three aspects to caching: 1. accelerating initial sync 2. caching in the browser 3. collapsing live requests ### Accelerating initial sync When a client makes a `GET` request to fetch shape data at a given `offset`, the response can be cached. Subsequent clients requesting the same data can be served from the proxy or CDN. This removes load from Electric (and from Postgres) and allows data to be served extremely quickly, at the edge by an optimised CDN. 
You can see an example Nginx config at packages/sync-service/dev/nginx.conf: nginx worker_processes 1; events { worker_connections 1024; } http { include mime.types; default_type application/octet-stream; # Enable gzip gzip on; gzip_types text/plain text/css application/javascript image/svg+xml application/json; gzip_min_length 1000; gzip_vary on; # Enable caching proxy_cache_path /var/cache/nginx levels=1:2 keys_zone=my_cache:10m max_size=1g inactive=60m use_temp_path=off; server { listen 3002; location / { proxy_pass http://host.docker.internal:3000; proxy_set_header Host $host; proxy_set_header X-Real-IP $remote_addr; proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for; proxy_set_header X-Forwarded-Proto $scheme; # Enable caching proxy_cache my_cache; proxy_cache_revalidate on; proxy_cache_min_uses 1; proxy_cache_methods GET HEAD; proxy_cache_use_stale error timeout; proxy_cache_background_update on; proxy_cache_lock on; # Add proxy cache status header add_header X-Proxy-Cache $upstream_cache_status; add_header X-Cache-Date $upstream_http_date; } } } ### Caching in the browser Requests are also designed to be cached by the browser. This allows apps to cache and avoid re-fetching data. For example, say a page loads data by syncing a shape.  The next time the user navigates to the same page, the data is in the browser file cache.  This can make data access instant and available offline, even without using a persistent local store. ### Collapsing live requests Once a client has requested the initial data for a shape, it switches into live mode, using long polling to wait for new data. When new data arrives, the client reconnects to wait for more data, and so on. Most caching proxies and CDNs support a feature called request collapsing (sometimes also called request coalescing). This identifies requests to the same resource, queues them on a waiting list, and only sends a single request to the origin. 
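To make the idea of request collapsing concrete, here is a minimal in-process sketch: concurrent requests for the same key share one in-flight call to the origin. The `collapse` helper is hypothetical and only illustrates the technique; real proxies and CDNs implement this in their own way.

```typescript
// Wrap an origin loader so that concurrent calls for the same key share one in-flight request.
function collapse<T>(load: (key: string) => Promise<T>): (key: string) => Promise<T> {
  const inFlight = new Map<string, Promise<T>>()
  return (key: string) => {
    const existing = inFlight.get(key)
    if (existing) return existing // join the waiting list for this resource
    const pending = load(key).finally(() => {
      // Once the origin has answered, later requests trigger a fresh origin call.
      inFlight.delete(key)
    })
    inFlight.set(key, pending)
    return pending
  }
}
```

With long-polling, the key would be the full request URL, so every client waiting at the same `offset` of the same shape would share a single request to the origin.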
Electric takes advantage of this to optimise realtime delivery to large numbers of concurrent clients. Instead of Electric holding open a connection per client, this is handled at the CDN level and allows us to coalesce concurrent long-polling requests in live mode. This is how Electric can support millions of concurrent clients with minimal load on the sync service and no load on the source Postgres. --- ## Page: https://electric-sql.com/docs/api/clients/typescript The TypeScript client is a higher-level client interface that wraps the HTTP API to make it easy to sync Shapes in the web browser and other JavaScript environments. Defined in packages/typescript-client, it provides a ShapeStream primitive to subscribe to a change stream and a Shape primitive to get the whole shape whenever it changes. ## Install The client is published on NPM as `@electric-sql/client`: sh npm i @electric-sql/client ## How to use The client exports: * a `ShapeStream` class for consuming a Shape Log; and * a `Shape` class for materialising the log stream into a shape object These compose together, e.g.: ts import { ShapeStream, Shape } from '@electric-sql/client' const stream = new ShapeStream({ url: `http://localhost:3000/v1/shape`, params: { table: 'items' } }) const shape = new Shape(stream) // The callback runs every time the Shape data changes. shape.subscribe(data => console.log(data)) ### ShapeStream The `ShapeStream` is a low-level primitive for consuming a Shape Log. Construct with a shape definition and options and then either subscribe to the shape log messages directly or pass into a `Shape` to materialise the stream into an object. 
tsx import { ShapeStream } from '@electric-sql/client' // Passes subscribers rows as they're inserted, updated, or deleted const stream = new ShapeStream({ url: `http://localhost:3000/v1/shape`, params: { table: `foo` } }) stream.subscribe(messages => { // messages is an array with one or more row updates // and the stream will wait for all subscribers to process them // before proceeding }) #### Options The `ShapeStream` constructor takes the following options: ts /** * Options for constructing a ShapeStream. */ export interface ShapeStreamOptions<T = never> { /** * The full URL to where the Shape is hosted. This can either be the Electric * server directly or a proxy. E.g. for a local Electric instance, you might * set `http://localhost:3000/v1/shape` */ url: string /** * PostgreSQL-specific parameters for the shape. * This includes table, where clause, columns, and replica settings. */ params: { /** * The root table for the shape. */ table: string /** * The where clauses for the shape. */ where?: string /** * Positional where clause parameter values. These will be passed to the server * and will substitute `$i` parameters in the where clause. * * It can be an array (note that positional arguments start at 1, the array will be mapped * accordingly), or an object with keys matching the used positional parameters in the where clause. * * If where clause is `id = $1 or id = $2`, params must have keys `"1"` and `"2"`, or be an array with length 2. */ params?: Record<`${number}`, string> | string[] /** * The columns to include in the shape. * Must include primary keys, and can only include valid columns. */ columns?: string[] /** * If `replica` is `default` (the default) then Electric will only send the * changed columns in an update. * * If it's `full` Electric will send the entire row with both changed and * unchanged values. `old_value` will also be present on update messages, * containing the previous value for changed columns. 
* * Setting `replica` to `full` will obviously result in higher bandwidth * usage and so is not recommended. */ replica?: Replica /** * Additional request parameters to attach to the URL. * These will be merged with Electric's standard parameters. */ [key: string]: string | string[] | undefined } /** * The "offset" on the shape log. This is typically not set as the ShapeStream * will handle this automatically. A common scenario where you might pass an offset * is if you're maintaining a local cache of the log. If you've gone offline * and are re-starting a ShapeStream to catch-up to the latest state of the Shape, * you'd pass in the last offset and shapeId you'd seen from the Electric server * so it knows at what point in the shape to catch you up from. */ offset?: Offset /** * Similar to `offset`, this isn't typically used unless you're maintaining * a cache of the shape log. */ shapeId?: string /** * HTTP headers to attach to requests made by the client. * Can be used for adding authentication headers. */ headers?: Record<string, string> /** * Automatically fetch updates to the Shape. If you just want to sync the current * shape and stop, pass false. */ subscribe?: boolean /** * Signal to abort the stream. */ signal?: AbortSignal /** * Custom fetch client implementation. */ fetchClient?: typeof fetch /** * Custom parser for handling specific Postgres data types. */ parser?: Parser<T> /** * A function for handling errors. * This is optional, when it is not provided any shapestream errors will be thrown. * If the function returns an object containing parameters and/or headers * the shapestream will apply those changes and try syncing again. * If the function returns void the shapestream is stopped. 
*/ onError?: ShapeStreamErrorHandler backoffOptions?: BackoffOptions } type RetryOpts = { params?: ParamsRecord headers?: Record<string, string> } type ShapeStreamErrorHandler = ( error: Error ) => void | RetryOpts | Promise<void | RetryOpts> Note that certain parameter names are reserved for Electric's internal use and cannot be used in custom params: * `offset` * `handle` * `live` * `cursor` * `source_id` The following PostgreSQL-specific parameters should be included within the `params` object: * `table` - The root table for the shape * `where` - SQL where clause for filtering rows * `params` - Values for positional parameters in the where clause (e.g. `$1`) * `columns` - List of columns to include * `replica` - Controls whether to send full or partial row updates Example with PostgreSQL-specific parameters: typescript const stream = new ShapeStream({ url: 'http://localhost:3000/v1/shape', params: { table: 'users', where: 'age > $1', columns: ['id', 'name', 'email'], params: ["18"], replica: 'full' } }) You can also include additional custom parameters in the `params` object alongside the PostgreSQL-specific ones: typescript const stream = new ShapeStream({ url: 'http://localhost:3000/v1/shape', params: { table: 'users', customParam: 'value' } }) #### Dynamic Options Both `params` and `headers` support function options that are resolved when needed. These functions can be synchronous or asynchronous: typescript const stream = new ShapeStream({ url: 'http://localhost:3000/v1/shape', params: { table: 'items', userId: () => getCurrentUserId(), filter: async () => await getUserPreferences() }, headers: { 'Authorization': async () => `Bearer ${await getAccessToken()}`, 'X-Tenant-Id': () => getCurrentTenant() } }) Function options are resolved in parallel, making this pattern efficient for multiple async operations like fetching auth tokens and user context. 
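As an illustration of what resolving function options in parallel can look like, the sketch below resolves a record of static or function-valued entries with `Promise.all`. The `resolveOptions` helper and the `MaybeFn` type are hypothetical and are not part of the client's API; this is a sketch of the pattern, not the client's actual implementation.

```typescript
// A value that is either static or produced by a sync/async function (hypothetical type).
type MaybeFn<T> = T | (() => T | Promise<T>)

// Resolve every entry concurrently rather than awaiting them one by one.
async function resolveOptions(
  options: Record<string, MaybeFn<string>>
): Promise<Record<string, string>> {
  const entries = await Promise.all(
    Object.keys(options).map(async (key) => {
      const value = options[key]
      const resolved = typeof value === 'function' ? await value() : value
      return { key, resolved }
    })
  )
  const result: Record<string, string> = {}
  for (const { key, resolved } of entries) result[key] = resolved
  return result
}
```

Because all functions are started before any is awaited, the total wait is roughly that of the slowest one rather than the sum of all of them.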
Common use cases include: * Authentication tokens that need to be refreshed * User-specific parameters that may change * Dynamic filtering based on current state * Multi-tenant applications where context determines the request #### Messages A `ShapeStream` consumes and emits a stream of messages. These messages can either be a `ChangeMessage` representing a change to the shape data: ts export type ChangeMessage<T extends Row<unknown> = Row> = { key: string value: T old_value?: Partial<T> // Only provided for updates if `replica` is `full` headers: Header & { operation: `insert` | `update` | `delete` } offset: Offset } Or a `ControlMessage`, representing an instruction to the client, as documented here. #### Parsing and Custom Parsing To understand the type of each column in your shape, you can check the `electric-schema` response header in the shape response. This header contains the PostgreSQL type information for each column. By default, when constructing a `ChangeMessage.value`, `ShapeStream` parses the following Postgres types into native JavaScript values: * `int2`, `int4`, `float4`, and `float8` are parsed into JavaScript `Number` * `int8` is parsed into a JavaScript `BigInt` * `bool` is parsed into a JavaScript `Boolean` * `json` and `jsonb` are parsed into JavaScript values/arrays/objects using `JSON.parse` * Postgres Arrays are parsed into JavaScript arrays, e.g. `"{{1,2},{3,4}}"` is parsed into `[[1,2],[3,4]]` All other types aren't parsed and are left in the string format as they were served by the HTTP endpoint. You can extend the default parsing behavior by defining custom parsers for specific PostgreSQL data types. This is particularly useful when you want to transform string representations of dates, JSON, or other complex types into their corresponding JavaScript objects. 
Here's an example: ts // Define row type type CustomRow = { id: number title: string created_at: Date // We want this to be a Date object } const stream = new ShapeStream<CustomRow>({ url: 'http://localhost:3000/v1/shape', params: { table: 'posts' }, parser: { // Parse timestamp columns into JavaScript Date objects timestamptz: (date: string) => new Date(date) } }) const shape = new Shape(stream) shape.subscribe(data => { console.log(data.created_at instanceof Date) // true }) #### Replica full By default Electric sends the modified columns in an update message, not the complete row. To be specific: * an `insert` operation contains the full row * an `update` operation contains the primary key column(s) and the changed columns * a `delete` operation contains just the primary key column(s) If you'd like to receive the full row value for updates and deletes, you can set the `replica` option of your `ShapeStream` to `full`: tsx import { ShapeStream } from "@electric-sql/client" const stream = new ShapeStream({ url: `http://localhost:3000/v1/shape`, params: { table: `foo`, replica: `full` } }) When using `replica=full`, the returned rows will include: * on `insert` the new value in `msg.value` * on `update` the new value in `msg.value` and the previous value in `msg.old_value` for any changed columns - the full previous state can be reconstructed by combining the two * on `delete` the full previous value in `msg.value` This is less efficient and will use more bandwidth for the same shape (especially for tables with large static column values). Note also that shapes with different `replica` settings are distinct, even for the same table and where clause combination. 
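The statement that the full previous state can be reconstructed by combining `msg.value` and `msg.old_value` can be sketched as follows. The `UpdateMessage` type and `previousRow` helper are hypothetical and only mirror the message fields described above; they assume `replica` is `full`, so that `value` holds the complete new row.

```typescript
type Row = Record<string, unknown>

// Hypothetical, trimmed-down view of an update message received with `replica: 'full'`.
type UpdateMessage<T extends Row> = {
  key: string
  value: T // full new row
  old_value?: Partial<T> // previous values of the changed columns only
  headers: { operation: 'update' }
}

// Previous row = new row with the changed columns replaced by their old values.
function previousRow<T extends Row>(msg: UpdateMessage<T>): T {
  return { ...msg.value, ...msg.old_value }
}
```

Unchanged columns come from `value`, and changed columns are overwritten by the entries in `old_value`.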
#### Authentication with Dynamic Tokens When working with authentication tokens that need to be refreshed, the recommended approach is to use a function-based header: ts const stream = new ShapeStream({ url: 'http://localhost:3000/v1/shape', params: { table: 'items' }, headers: { 'Authorization': async () => `Bearer ${await getToken()}` }, onError: async (error) => { if (error instanceof FetchError && error.status === 401) { // Force token refresh await refreshToken() // Return empty object to trigger a retry with the new token // that will be fetched by our function-based header return {} } // Rethrow errors we can't handle throw error } }) This approach automatically handles token refresh as the function is called each time a request is made. You can also combine this with an error handler for more complex scenarios. ### Shape The `Shape` is the main primitive for working with synced data. It takes a `ShapeStream`, consumes the stream, materialises it into a Shape object and notifies you when this changes. tsx import { ShapeStream, Shape } from '@electric-sql/client' const stream = new ShapeStream({ url: `http://localhost:3000/v1/shape`, params: { table: `foo` } }) const shape = new Shape(stream) // Returns promise that resolves with the latest shape data once it's fully loaded await shape.rows // passes subscribers shape data when the shape updates shape.subscribe(({ rows }) => { // rows is an array of the latest value of each row in a shape. }) ### Subscribing to updates The `subscribe` method allows you to receive updates whenever the shape changes. It takes two arguments: 1. A message handler callback (required) 2. 
An error handler callback (optional) typescript const stream = new ShapeStream({ url: 'http://localhost:3000/v1/shape', params: { table: 'issues' } }) // Subscribe to both message and error handlers stream.subscribe( (messages) => { // Process messages console.log('Received messages:', messages) }, (error) => { // Get notified about errors console.error('Error in subscription:', error) } ) You can have multiple active subscriptions to the same stream. Each subscription will receive the same messages, and the stream will wait for all subscribers to process their messages before proceeding. To stop receiving updates, you can either: * Unsubscribe a specific subscription using the function returned by `subscribe` * Unsubscribe all subscriptions using `unsubscribeAll()` typescript // Store the unsubscribe function const unsubscribe = stream.subscribe(messages => { console.log('Received messages:', messages) }) // Later, unsubscribe this specific subscription unsubscribe() // Or unsubscribe all subscriptions stream.unsubscribeAll() ### Error Handling The ShapeStream provides two ways to handle errors: 1. Using the `onError` handler (recommended): typescript const stream = new ShapeStream({ url: 'http://localhost:3000/v1/shape', params: { table: 'issues' }, onError: (error) => { // Handle all stream errors here if (error instanceof FetchError) { console.error('HTTP error:', error.status, error.message) } else { console.error('Stream error:', error) } } }) If no `onError` handler is provided, the ShapeStream will throw errors that occur during streaming. 2. 
Individual subscribers can optionally handle errors specific to their subscription: typescript stream.subscribe( (messages) => { // Process messages }, (error) => { // Handle errors for this specific subscription console.error('Subscription error:', error) } ) #### Error Types The following error types may be encountered: **Initialization Errors** (thrown by constructor): * `MissingShapeUrlError`: Missing required URL parameter * `InvalidSignalError`: Invalid AbortSignal instance * `ReservedParamError`: Using reserved parameter names **Runtime Errors** (handled by `onError` or thrown): * `FetchError`: HTTP errors during shape fetching * `FetchBackoffAbortError`: Fetch aborted using AbortSignal * `MissingShapeHandleError`: Missing required shape handle * `ParserNullValueError`: Parser encountered NULL value in a column that doesn't allow NULL values See the Demos and integrations for more usage examples. --- ## Page: https://electric-sql.com/docs/api/clients/elixir Electric provides an Elixir client that wraps the HTTP API into a higher-level stream interface and a Phoenix integration that adds sync to your Phoenix application. ## How to use The `Electric.Client` library allows you to stream Shapes into your Elixir application. It's published to Hex as the `electric_client` package. ### Stream The client exposes a `stream/3` that streams a Shape Log into an `Enumerable`: elixir Mix.install([:electric_client]) {:ok, client} = Electric.Client.new(base_url: "http://localhost:3000") stream = Electric.Client.stream(client, "my_table", where: "something = true") stream |> Stream.each(&IO.inspect/1) |> Stream.run() You can materialise the shape stream into a variety of data structures. For example by matching on insert, update and delete operations and applying them to a Map or an Ecto struct. (See the Redis example and TypeScript Shape class for reference). 
### Ecto queries The `stream/3` function also supports deriving the shape definition from an `Ecto.Query`: elixir import Ecto.Query, only: [from: 2] query = from(t in MyTable, where: t.something == true) stream = Electric.Client.stream(client, query) See the documentation at hexdocs.pm/electric\_client for more details. ## Phoenix integration Electric also provides an `Electric.Phoenix` integration that allows you to: * sync data into a front-end app from a Postgres-backed Phoenix application; and * add real-time streaming from Postgres into Phoenix LiveView via Phoenix.Streams See the Phoenix framework integration page for more details. --- ## Page: https://electric-sql.com/docs/api/config ## Sync service configuration This page documents the config options for self-hosting the Electric sync engine. Advanced only You don't need to worry about this if you're using Electric Cloud. Also, the only required configuration is `DATABASE_URL`. ## Configuration The sync engine is an Elixir application developed at packages/sync-service and published as a Docker image at electricsql/electric. Configuration options can be provided as environment variables, e.g.: shell docker run \ -e "DATABASE_URL=postgresql://..." \ -e "ELECTRIC_DB_POOL_SIZE=10" \ -p 3000:3000 \ electricsql/electric These are passed into the application via config/runtime.exs. ## Database ### DATABASE\_URL <table><tbody><tr><td>Variable</td><td><code>DATABASE_URL</code><span>required</span></td></tr><tr><td>Description</td><td><p>Postgres connection string. Used to connect to the Postgres database.</p><p>The connection string must be in the <a href="https://www.postgresql.org/docs/current/libpq-connect.html#LIBPQ-CONNSTRING-URIS" target="_blank" rel="noreferrer">libpq Connection URI format</a> of <code>postgresql://[userspec@][hostspec][/dbname][?sslmode=<sslmode>]</code>.</p><p>The <code>userspec</code> section of the connection string specifies the database user that Electric connects to Postgres as. 
They must have the <code>REPLICATION</code> role.</p><p>For a secure connection, set the <code>sslmode</code> query parameter to <code>require</code>.</p></td></tr><tr><td>Example</td><td><code>DATABASE_URL=postgresql://user:password@example.com:54321/electric</code></td></tr></tbody></table> ### ELECTRIC\_QUERY\_DATABASE\_URL <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_QUERY_DATABASE_URL</code></td></tr><tr><td>Default</td><td><code>DATABASE_URL</code></td></tr><tr><td>Description</td><td><p>Postgres connection string. Used to connect to the Postgres database for everything except replication. Defaults to the value of <code>DATABASE_URL</code> if not provided.</p><p>The connection string must be in the <a href="https://www.postgresql.org/docs/current/libpq-connect.html#LIBPQ-CONNSTRING-URIS" target="_blank" rel="noreferrer">libpq Connection URI format</a> of <code>postgresql://[userspec@][hostspec][/dbname][?sslmode=<sslmode>]</code>.</p><p>The <code>userspec</code> section of the connection string specifies the database user that Electric connects to Postgres as. This can point to a connection pooler and does not need a <code>REPLICATION</code> role as it does not handle the replication.</p><p>For a secure connection, set the <code>sslmode</code> query parameter to <code>require</code>.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_QUERY_DATABASE_URL=postgresql://user:password@example-pooled.com:54321/electric</code></td></tr></tbody></table> ### ELECTRIC\_DATABASE\_USE\_IPV6 <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_DATABASE_USE_IPV6</code></td></tr><tr><td>Default</td><td><code>false</code></td></tr><tr><td>Description</td><td><p>Set to <code>true</code> to prioritise connecting to the database over IPv6. 
Electric will fall back to an IPv4 DNS lookup if the IPv6 lookup fails.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_DATABASE_USE_IPV6=true</code></td></tr></tbody></table> ### ELECTRIC\_DB\_POOL\_SIZE <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_DB_POOL_SIZE</code></td></tr><tr><td>Default</td><td><code>20</code></td></tr><tr><td>Description</td><td><p>How many connections Electric opens as a pool for handling shape queries.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_DB_POOL_SIZE=10</code></td></tr></tbody></table> ### ELECTRIC\_REPLICATION\_STREAM\_ID <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_REPLICATION_STREAM_ID</code></td></tr><tr><td>Default</td><td><code>default</code></td></tr><tr><td>Description</td><td><p>Suffix for the logical replication publication and slot name.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_REPLICATION_STREAM_ID=my-app</code></td></tr></tbody></table> ## Electric ### ELECTRIC\_SECRET <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_SECRET</code><span>required</span></td></tr><tr><td>Description</td><td><p>Secret for shape requests to the <a href="https://electric-sql.com/docs/api/http">HTTP API</a>. This is required unless <code>ELECTRIC_INSECURE</code> is set to <code>true</code>. By default, the Electric API is public and authorises all shape requests against this secret. More details are available in the <a href="https://electric-sql.com/docs/guides/security">security guide</a>.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_SECRET=1U6ItbhoQb4kGUU5wXBLbxvNf</code></td></tr></tbody></table> ### ELECTRIC\_INSECURE <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_INSECURE</code></td></tr><tr><td>Default</td><td><code>false</code></td></tr><tr><td>Description</td><td><p>When set to <code>true</code>, runs Electric in insecure mode and does not require an <code>ELECTRIC_SECRET</code>. Use with caution. API requests are unprotected and may risk exposing your database. 
Good for development environments. If used in production, make sure to <a href="https://electric-sql.com/docs/guides/security#network-security">lock down access</a> to Electric.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_INSECURE=true</code></td></tr></tbody></table> ### ELECTRIC\_INSTANCE\_ID <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_INSTANCE_ID</code></td></tr><tr><td>Default</td><td><code>Electric.Utils.uuid4()</code></td></tr><tr><td>Description</td><td><p>A unique identifier for the Electric instance. Defaults to a randomly generated UUID.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_INSTANCE_ID=some-unique-instance-identifier</code></td></tr></tbody></table> ### ELECTRIC\_SERVICE\_NAME <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_SERVICE_NAME</code></td></tr><tr><td>Default</td><td><code>electric</code></td></tr><tr><td>Description</td><td><p>Name of the electric service. Used as a resource identifier and namespace.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_SERVICE_NAME=my-electric-service</code></td></tr></tbody></table> ### ELECTRIC\_ENABLE\_INTEGRATION\_TESTING <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_ENABLE_INTEGRATION_TESTING</code></td></tr><tr><td>Default</td><td><code>false</code></td></tr><tr><td>Description</td><td><p>Expose some unsafe operations that facilitate integration testing. Do not enable this in production.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_ENABLE_INTEGRATION_TESTING=true</code></td></tr></tbody></table> ### ELECTRIC\_LISTEN\_ON\_IPV6 <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_LISTEN_ON_IPV6</code></td></tr><tr><td>Default</td><td><code>false</code></td></tr><tr><td>Description</td><td><p>By default, Electric binds to IPv4. 
Enable this to listen on IPv6 addresses as well.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_LISTEN_ON_IPV6=true</code></td></tr></tbody></table> ### ELECTRIC\_SHAPE\_CHUNK\_BYTES\_THRESHOLD <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_SHAPE_CHUNK_BYTES_THRESHOLD</code></td></tr><tr><td>Default</td><td><code>10485760</code></td></tr><tr><td>Description</td><td><p>Limit the maximum size of a shape log response, to ensure they are cached by upstream caches. Defaults to 10MB (10 * 1024 * 1024).</p><p>See <a href="https://github.com/electric-sql/electric/issues/1581" target="_blank" rel="noreferrer">#1581</a> for context.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_SHAPE_CHUNK_BYTES_THRESHOLD=20971520</code></td></tr></tbody></table> ### ELECTRIC\_PORT <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_PORT</code></td></tr><tr><td>Default</td><td><code>3000</code></td></tr><tr><td>Description</td><td><p>Port that the <a href="https://electric-sql.com/docs/api/http">HTTP API</a> is exposed on.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_PORT=8080</code></td></tr></tbody></table> ## Caching ### ELECTRIC\_CACHE\_MAX\_AGE <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_CACHE_MAX_AGE</code></td></tr><tr><td>Default</td><td><code>60</code></td></tr><tr><td>Description</td><td><p>Default <code>max-age</code> for the cache headers of the HTTP API.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_CACHE_MAX_AGE=5</code></td></tr></tbody></table> ### ELECTRIC\_CACHE\_STALE\_AGE <table><tbody><tr><td>Variable</td><td><code>ELECTRIC_CACHE_STALE_AGE</code></td></tr><tr><td>Default</td><td><code>300</code></td></tr><tr><td>Description</td><td><p>Default <code>stale-age</code> for the cache headers of the HTTP API.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_CACHE_STALE_AGE=5</code></td></tr></tbody></table> ## Storage ### ELECTRIC\_PERSISTENT\_STATE 
<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_PERSISTENT_STATE</code></td></tr><tr><td>Default</td><td><code>FILE</code></td></tr><tr><td>Description</td><td><p>Where to store shape metadata. Defaults to storing on the filesystem. If provided, must be one of <code>MEMORY</code> or <code>FILE</code>.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_PERSISTENT_STATE=MEMORY</code></td></tr></tbody></table>

### ELECTRIC\_STORAGE

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_STORAGE</code></td></tr><tr><td>Default</td><td><code>FILE</code></td></tr><tr><td>Description</td><td><p>Where to store shape logs. Defaults to storing on the filesystem. If provided, must be one of <code>MEMORY</code> or <code>FILE</code>.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_STORAGE=MEMORY</code></td></tr></tbody></table>

### ELECTRIC\_STORAGE\_DIR

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_STORAGE_DIR</code></td></tr><tr><td>Default</td><td><code>./persistent</code></td></tr><tr><td>Description</td><td><p>Path to root folder for storing data on the filesystem.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_STORAGE_DIR=/var/example</code></td></tr></tbody></table>

## Telemetry

These environment variables allow configuration of metric and trace export for visibility into performance of the Electric instance.
### ELECTRIC\_OTLP\_ENDPOINT

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_OTLP_ENDPOINT</code><span>optional</span></td></tr><tr><td>Description</td><td><p>Set an <a href="https://opentelemetry.io/docs/what-is-opentelemetry/" target="_blank" rel="noreferrer">OpenTelemetry</a> endpoint URL to enable telemetry.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_OTLP_ENDPOINT=https://example.com</code></td></tr></tbody></table>

### ELECTRIC\_OTEL\_DEBUG

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_OTEL_DEBUG</code></td></tr><tr><td>Default</td><td><code>false</code></td></tr><tr><td>Description</td><td><p>Debug tracing by printing spans to stdout, without batching.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_OTEL_DEBUG=true</code></td></tr></tbody></table>

### ELECTRIC\_HNY\_API\_KEY

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_HNY_API_KEY</code><span>optional</span></td></tr><tr><td>Description</td><td><p><a href="https://www.honeycomb.io/" target="_blank" rel="noreferrer">Honeycomb.io</a> API key.
Specify along with <code>ELECTRIC_HNY_DATASET</code> to export traces directly to Honeycomb, without the need to run an OpenTelemetry Collector.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_HNY_API_KEY=your-api-key</code></td></tr></tbody></table>

### ELECTRIC\_HNY\_DATASET

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_HNY_DATASET</code><span>optional</span></td></tr><tr><td>Description</td><td><p>Name of your Honeycomb Dataset.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_HNY_DATASET=your-dataset-name</code></td></tr></tbody></table>

### ELECTRIC\_PROMETHEUS\_PORT

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_PROMETHEUS_PORT</code><span>optional</span></td></tr><tr><td>Description</td><td><p>Expose a Prometheus reporter for telemetry data on the specified port.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_PROMETHEUS_PORT=9090</code></td></tr></tbody></table>

### ELECTRIC\_STATSD\_HOST

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_STATSD_HOST</code><span>optional</span></td></tr><tr><td>Description</td><td><p>Enable sending telemetry data to a StatsD reporting endpoint.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_STATSD_HOST=https://example.com</code></td></tr></tbody></table>

## Logging

### ELECTRIC\_LOG\_LEVEL

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_LOG_LEVEL</code><span>optional</span></td></tr><tr><td>Description</td><td><p>Verbosity of Electric's log output.</p><p>Available levels, in order of increasing verbosity:</p><ul><li><code>error</code></li><li><code>warning</code></li><li><code>info</code></li><li><code>debug</code></li></ul></td></tr><tr><td>Example</td><td><code>ELECTRIC_LOG_LEVEL=debug</code></td></tr></tbody></table>

### ELECTRIC\_LOG\_COLORS

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_LOG_COLORS</code><span>optional</span></td></tr><tr><td>Description</td><td><p>Enable or disable ANSI coloring of Electric's log output.</p><p>By default, coloring is enabled when Electric's
stdout is connected to a terminal. This may be undesirable in certain runtime environments, such as AWS, which displays ANSI color codes as escape sequences and may incorrectly split log entries into multiple lines.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_LOG_COLORS=false</code></td></tr></tbody></table>

### ELECTRIC\_LOG\_OTP\_REPORTS

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_LOG_OTP_REPORTS</code></td></tr><tr><td>Default</td><td><code>false</code></td></tr><tr><td>Description</td><td><p>Enable <a href="https://www.erlang.org/doc/apps/sasl/sasl_app.html" target="_blank" rel="noreferrer">OTP SASL</a> reporting at runtime.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_LOG_OTP_REPORTS=true</code></td></tr></tbody></table>

## Usage reporting

### ELECTRIC\_USAGE\_REPORTING

These environment variables allow configuration of anonymous usage data reporting back to https://electric-sql.com.

<table><tbody><tr><td>Variable</td><td><code>ELECTRIC_USAGE_REPORTING</code></td></tr><tr><td>Default</td><td><code>true</code></td></tr><tr><td>Description</td><td><p>Controls whether anonymous usage data about the instance is sent to a central checkpoint service. Collected information is anonymised and doesn't contain any information from the replicated data. You can read more about it in our <a href="https://electric-sql.com/docs/reference/telemetry#anonymous-usage-data">telemetry docs</a>.</p></td></tr><tr><td>Example</td><td><code>ELECTRIC_USAGE_REPORTING=true</code></td></tr></tbody></table>
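
As a rough illustration of how several of the variables documented on this page might be combined, the sketch below exports a handful of them in a shell session before Electric is started. The particular combination is an assumption made for illustration, not a recommended configuration. The values are taken from the examples above where possible, and the chunk threshold is computed rather than hard-coded so the intended size (20 * 1024 * 1024 bytes) stays visible. How Electric itself is launched afterwards is outside the scope of this sketch.

```sh
# Illustrative only: a hypothetical combination of settings from this page.

# Networking
export ELECTRIC_PORT=8080
export ELECTRIC_LISTEN_ON_IPV6=true

# Shape log responses: 20 * 1024 * 1024 bytes, matching the example value above
export ELECTRIC_SHAPE_CHUNK_BYTES_THRESHOLD=$((20 * 1024 * 1024))

# Storage: keep shape metadata and shape logs on the filesystem
export ELECTRIC_PERSISTENT_STATE=FILE
export ELECTRIC_STORAGE=FILE
export ELECTRIC_STORAGE_DIR=/var/example

# Logging: plain output without ANSI colors
export ELECTRIC_LOG_LEVEL=info
export ELECTRIC_LOG_COLORS=false

# Review the resulting ELECTRIC_* environment before starting Electric
env | grep '^ELECTRIC_' | sort
```

Computing the threshold with shell arithmetic avoids transcription mistakes in long byte counts. The final line prints every `ELECTRIC_`-prefixed variable in the current environment, including any that were set before this snippet ran.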